Future AI Guide

The Ultimate AI Resource Hub

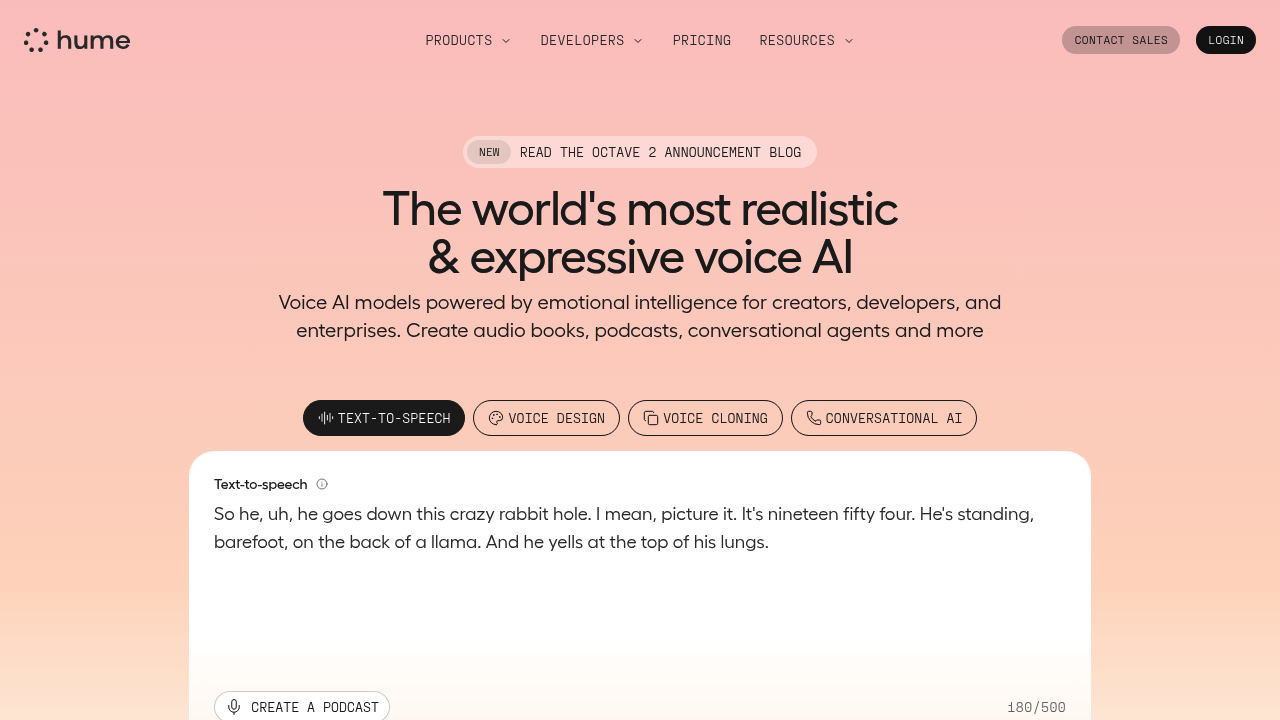

Hume

Hume AI: Voice interactions powered by emotional intelligence for human connection.

Hume – Emotion AI, Voice-Based Understanding, and Empathic Human–AI Interaction

Hume was created to bridge the gap between human emotional expression and artificial intelligence. Traditional AI systems excel at processing language and data but struggle to understand tone, emotion, and nuance—the core elements of human communication. This limitation affects customer support, coaching, mental health tools, conversational AI, and any interaction requiring empathy or emotional awareness.

Hume solves this challenge with Emotion AI: models that analyze voice, speech patterns, and text to detect emotional states. Its empathic listener APIs and real-time emotion streaming help applications respond not only to what users say, but how they say it. Hume enables more authentic, supportive, and adaptive human–computer experiences.

Key Features

- Empathic Voice AI: Detects emotional expression from tone, pitch, and speech rhythm.

- Emotion Measurement: Continuous measurement across emotional dimensions such as joy, fear, sadness, and confidence.

- Real-Time Streaming: Analyze user emotions live during calls or conversations.

- Voice-to-Voice Interaction: Build AI agents with emotionally aware responses.

- Text Emotion Analysis: Understand sentiment and affect from written text.

Pros

- Finer-grained emotional signals than traditional positive/negative sentiment tools provide.

- Real-time emotion streaming for live experiences.

- Clear APIs for developers integrating emotion AI.

- Enables tools that respond empathetically rather than mechanically.

Cons

- Emotion analysis depends heavily on audio quality.

- Not suitable for purely technical Q&A without emotional context.

- Regulatory and ethical considerations require careful handling.

- Advanced features may require enterprise-level plans.

Pricing

Hume offers several options:

- Free Tier: Limited requests and basic emotion analysis for prototypes.

- Developer Plan: Higher request limits, streaming access, and priority latency.

- Business & Enterprise Plans: Advanced throughput, SLA guarantees, custom integrations, on-premise deployment, and enterprise-level privacy controls.

- Usage-Based API Pricing: Charged per second of audio or per emotion analysis call.

Exact pricing varies depending on API volume and latency requirements.
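
As a rough illustration of per-second billing, the sketch below estimates the cost of a batch of audio. The rate used here is a made-up placeholder, not a published Hume price, and the function name is hypothetical; substitute the figure from your own plan.

```python
from decimal import Decimal


def estimate_audio_cost(seconds: int, rate_per_second: Decimal) -> Decimal:
    """Multiply audio duration by a per-second rate and round to cents."""
    return (Decimal(seconds) * rate_per_second).quantize(Decimal("0.01"))


# ASSUMPTION: 0.001 per second is an invented rate used only for the arithmetic.
PLACEHOLDER_RATE = Decimal("0.001")

# Ten minutes of call audio at the placeholder rate.
print(estimate_audio_cost(600, PLACEHOLDER_RATE))
```

Using `Decimal` rather than floats keeps the rounding predictable when many small charges are summed.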

Who Is Using This Tool?

- Customer support teams improving call experiences with emotion tracking.

- Coaching & therapy platforms enhancing empathy and personalization.

- Conversational AI companies building emotionally aware agents.

- Education platforms understanding student frustration or engagement.

- Call centers monitoring tone and sentiment to improve service quality.

- Researchers studying human emotional expression.

Any industry requiring high-touch, human-like communication can benefit.

Technical Details

Emotion AI Engine

Hume uses multi-dimensional emotional modeling based on:

- prosody recognition (tone, pitch, speed)

- vocal acoustic analysis

- semantic interpretation

- context modeling

The engine outputs continuous emotional scores rather than simplistic positive/negative sentiment.
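
To make the difference from binary sentiment concrete, here is a minimal sketch of how an application might handle a set of continuous scores. The dictionary shape, the score values, and the helper name are assumptions for illustration and do not reflect Hume's actual response format.

```python
def top_emotions(scores: dict[str, float], n: int = 3) -> list[tuple[str, float]]:
    """Return the n highest-scoring dimensions, strongest first."""
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)[:n]


# Hypothetical scores for one utterance; a real payload may look different.
sample = {"joy": 0.12, "anger": 0.61, "sadness": 0.08, "calmness": 0.05, "curiosity": 0.33}

print(top_emotions(sample, 2))  # [('anger', 0.61), ('curiosity', 0.33)]
```

A single positive/negative label would collapse this utterance to "negative" and lose the fact that curiosity is also present.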

Emotion Expressions

Hume maps emotions across dimensions such as:

- joy

- anger

- sadness

- insecurity

- calmness

- curiosity

- engagement

These signals update in real time as speech continues.
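
Scores that update continuously can be jumpy from one moment to the next, so applications often smooth them before acting. The sketch below uses an exponential moving average; this is a generic technique chosen for illustration, not something Hume is documented to apply, and the frame format is assumed.

```python
from typing import Optional

Scores = dict[str, float]


def ema_update(state: Optional[Scores], frame: Scores, alpha: float = 0.3) -> Scores:
    """Blend a new frame of scores into the running average.

    alpha close to 1 reacts quickly; alpha close to 0 smooths heavily.
    Dimensions missing from either side are treated as 0.0.
    """
    if state is None:
        return dict(frame)  # first frame: nothing to blend with yet
    names = state.keys() | frame.keys()
    return {
        name: alpha * frame.get(name, 0.0) + (1 - alpha) * state.get(name, 0.0)
        for name in names
    }


# Hypothetical frames arriving over time.
state = None
for frame in [{"joy": 0.2, "anger": 0.1}, {"joy": 0.9, "anger": 0.1}]:
    state = ema_update(state, frame)
```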

Real-Time Streaming API

Allows developers to:

- stream voice data

- receive emotional signals every few milliseconds

- trigger adaptive responses in an AI agent
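
The pattern above can be sketched without any real network connection. In the example below, `fake_emotion_stream` is a stand-in that yields hard-coded frames; it is not Hume's SDK, and the frame shape, threshold, and action names are all assumptions.

```python
import asyncio
from typing import AsyncIterator


async def fake_emotion_stream() -> AsyncIterator[dict[str, float]]:
    """Stand-in for a streaming connection; yields invented score frames."""
    frames = [
        {"calmness": 0.7, "anger": 0.1},
        {"calmness": 0.4, "anger": 0.5},
        {"calmness": 0.2, "anger": 0.8},
    ]
    for frame in frames:
        await asyncio.sleep(0)  # hand control back to the loop, as a network read would
        yield frame


async def monitor(stream: AsyncIterator[dict[str, float]], threshold: float = 0.6) -> list[str]:
    """Decide on an action for each incoming frame."""
    actions = []
    async for frame in stream:
        if frame.get("anger", 0.0) >= threshold:
            actions.append("de-escalate")  # e.g. soften tone or hand off to a human
        else:
            actions.append("continue")
    return actions


print(asyncio.run(monitor(fake_emotion_stream())))
# ['continue', 'continue', 'de-escalate']
```

In a real integration the generator would be replaced by whatever streaming client the provider supplies, while the decision loop stays the same.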

Security & Privacy

Hume supports:

- encrypted audio streaming

- no model training on user data without explicit consent

- enterprise-grade compliance controls

The User Experience

Ease of Use

- Simple API for voice or text emotion analysis.

- SDKs and dashboards for monitoring emotional signals.

- Easy integration into chatbots, call systems, or apps.

Accessibility

- Cloud-based, with low-latency streaming.

- Works with microphones, phone calls, or audio recordings.

- Supports multiple programming languages.

Workflow

- Capture user audio or text.

- Send data to Hume’s Emotion API.

- Receive emotional scoring and expression insights.

- Adapt the AI response or interface based on user emotion.

- Improve user experience through real-time empathy.
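
The workflow above can be sketched end to end with the analysis step stubbed out. `stub_analyze` below is a keyword heuristic that merely stands in for an emotion API call; it is not a real model, and every name and score in the example is hypothetical.

```python
from typing import Callable

Scores = dict[str, float]


def stub_analyze(text: str) -> Scores:
    """Placeholder for the emotion API call: a crude keyword check, NOT a real model."""
    lowered = text.lower()
    frustrated = any(word in lowered for word in ("again", "still", "broken"))
    return {
        "anger": 0.7 if frustrated else 0.1,
        "calmness": 0.2 if frustrated else 0.8,
    }


# Assumed mapping from the dominant emotion to a response style.
STYLE_BY_EMOTION = {"anger": "apologetic", "sadness": "supportive", "calmness": "neutral"}


def handle_turn(text: str, analyze: Callable[[str], Scores]) -> str:
    """Capture input, score it, and pick a response style from the strongest emotion."""
    scores = analyze(text)                   # send data, receive emotional scoring
    dominant = max(scores, key=scores.get)   # strongest dimension
    return STYLE_BY_EMOTION.get(dominant, "neutral")  # adapt the response


print(handle_turn("It is still broken", stub_analyze))   # apologetic
print(handle_turn("Thanks, that worked", stub_analyze))  # neutral
```

Passing the analyzer in as a parameter keeps the adaptation logic testable and lets the stub be swapped for a real API client later.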

Summary

Hume enables AI systems to interpret and respond to human emotion, making digital interactions more natural, empathetic, and supportive. With real-time emotional intelligence, it transforms customer service, coaching, mental health tools, conversational agents, and any experience where understanding feelings matters. Hume is redefining what emotionally aware technology can achieve.

Related Tools

- Ellie.ai – Mental health conversational AI with emotion detection.

- Cerebras Voice Models – Advanced speech and vocal generation.

- AssemblyAI – Audio intelligence including emotion classification.

- Deepgram – Speech recognition with sentiment modules.

- Sonantic – Emotion-rich voice synthesis for entertainment.

AIVA

Coqui

ElevenLabs