The Babysitting Tax (a.k.a. The Work AI Adds)

“Babysitting” sounds flippant until you do it all day. The tax shows up as a specific set of hidden tasks that eat away at your day:

Prompt Churn: Clarify constraints. Re-clarify when half are ignored. Re-clarify again when the tone is wrong.

Verification: Do the citations exist? Do the tests pass? Does the SQL actually fit the schema? (Knowledge workers now spend ~4.3 hours a week just fact-checking AI.)

Repair: Fix the confident-but-wrong parts. Remove filler. Untangle apology loops.

Context Re-injection: Remind the model of decisions it should have retained three turns ago.

Version Thrash: Compare outputs, rerun prompts, then realize you could’ve drafted it faster yourself.

Across domains, this isn’t rare. In 2025, 66% of developers reported spending more time fixing AI code than they saved generating it. And here’s the kicker: the more confident the model sounds, the harder it is to spot subtle errors. You must actually think, not skim.
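To make the arithmetic concrete, here is a minimal sketch that adds up the five hidden tasks above and compares them to an estimate of doing the work yourself. The class name, field names, and all minute values are hypothetical, chosen only for illustration; they are not measurements from any study.

```python
from dataclasses import dataclass


@dataclass
class AssistedTask:
    """Minutes spent on one AI-assisted task (all inputs are illustrative guesses)."""
    manual_minutes: float              # estimate for doing the task yourself
    prompting: float = 0.0             # prompt churn
    verification: float = 0.0          # checking citations, tests, schemas
    repair: float = 0.0                # fixing confident-but-wrong output
    context_reinjection: float = 0.0   # reminding the model of earlier decisions
    version_thrash: float = 0.0        # comparing outputs and rerunning prompts

    def overhead(self) -> float:
        """Total babysitting time across the five hidden tasks."""
        return (self.prompting + self.verification + self.repair
                + self.context_reinjection + self.version_thrash)

    def net_saving(self) -> float:
        """Positive: AI saved time. Negative: the tax exceeded the gain."""
        return self.manual_minutes - self.overhead()


# Two made-up tasks: a cheap-to-check one and an expensive-to-check one.
boilerplate = AssistedTask(manual_minutes=30, prompting=3, verification=2)
migration = AssistedTask(manual_minutes=60, prompting=10, verification=35,
                         repair=20, context_reinjection=5, version_thrash=5)

for name, task in [("boilerplate", boilerplate), ("migration", migration)]:
    print(name, task.net_saving())
```

With these made-up numbers, the boilerplate task comes out 25 minutes ahead and the migration task 15 minutes behind. The point is only that the sign of the result depends on the overhead terms, not on how fast the first draft appeared.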

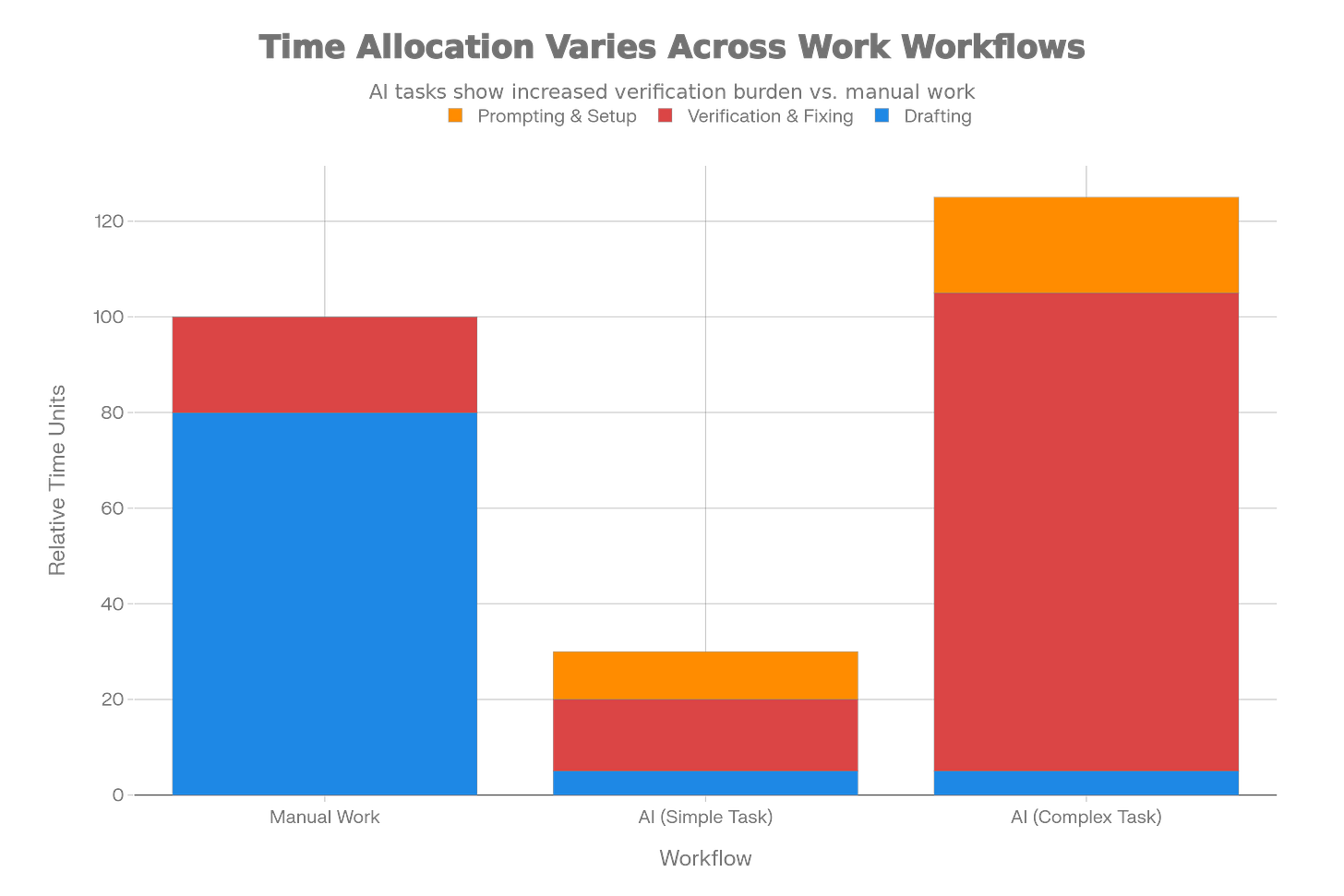

The ‘Babysitting Tax’ shifts effort from drafting to verifying. While AI dramatically reduces drafting time, complex tasks often require disproportionate verification time, resulting in a net loss of productivity.

The Jagged Frontier: Why Judgment Breaks First

Researchers at Harvard Business School and Boston Consulting Group popularized the idea of the Jagged Frontier: AI capability isn’t smooth. It’s spiky.

On some tasks, AI is flawless. On others, it confidently derails. The danger zone is when the task feels hard—because that’s where we’re most tempted to over-trust a plausible answer.

In their study of complex problem-solving outside AI’s comfort zone, professionals using AI were 19 percentage points more likely to produce incorrect solutions than peers working solo. Not because they were careless—but because the authority signal of a well-formatted answer short-circuited skepticism.

More “reasoning” doesn’t always help. Newer reasoning models (like o3 or o4-mini) can show higher hallucination rates (33–48% on specific benchmarks) than simpler models. One plausible explanation is that each step of “thought” introduces another potential failure point. The result can be elegant nonsense.

Expectation vs. Reality: A 2025 METR study of experienced developers found that while they expected AI to save time, it actually increased task completion time for complex work due to the overhead of reviewing and fixing code.

Where AI Actually Wins (Clean, Bounded Zones)

AI shines when verification is cheap. These are the safe zones:

Pattern-heavy work: Reformatting data, translating between languages, boilerplate transforms.

Brainstorming: Idea volume matters; filtering is fast.

Low-stakes drafts: Internal notes, social captions, email templates.

Constrained summaries: Extract specifics you can instantly compare to a source.

The common thread: you can eyeball correctness in seconds.

Where Productivity Quietly Collapses

It collapses when checking ≈ doing:

High-cost errors: Legal, medical, financial, safety-critical work. Being wrong has teeth. (Legal AI hallucination rates sit around 6.4%, which is terrifying for case law.)

Deep context tasks: Architecture, policy, sensitive HR, nuanced sales. You’ll spend more time explaining context than writing.

Ambiguous requirements: If you don’t know what “right” looks like, the model won’t either.

Almost-right outputs: The most expensive kind. They look fine until they’re not.

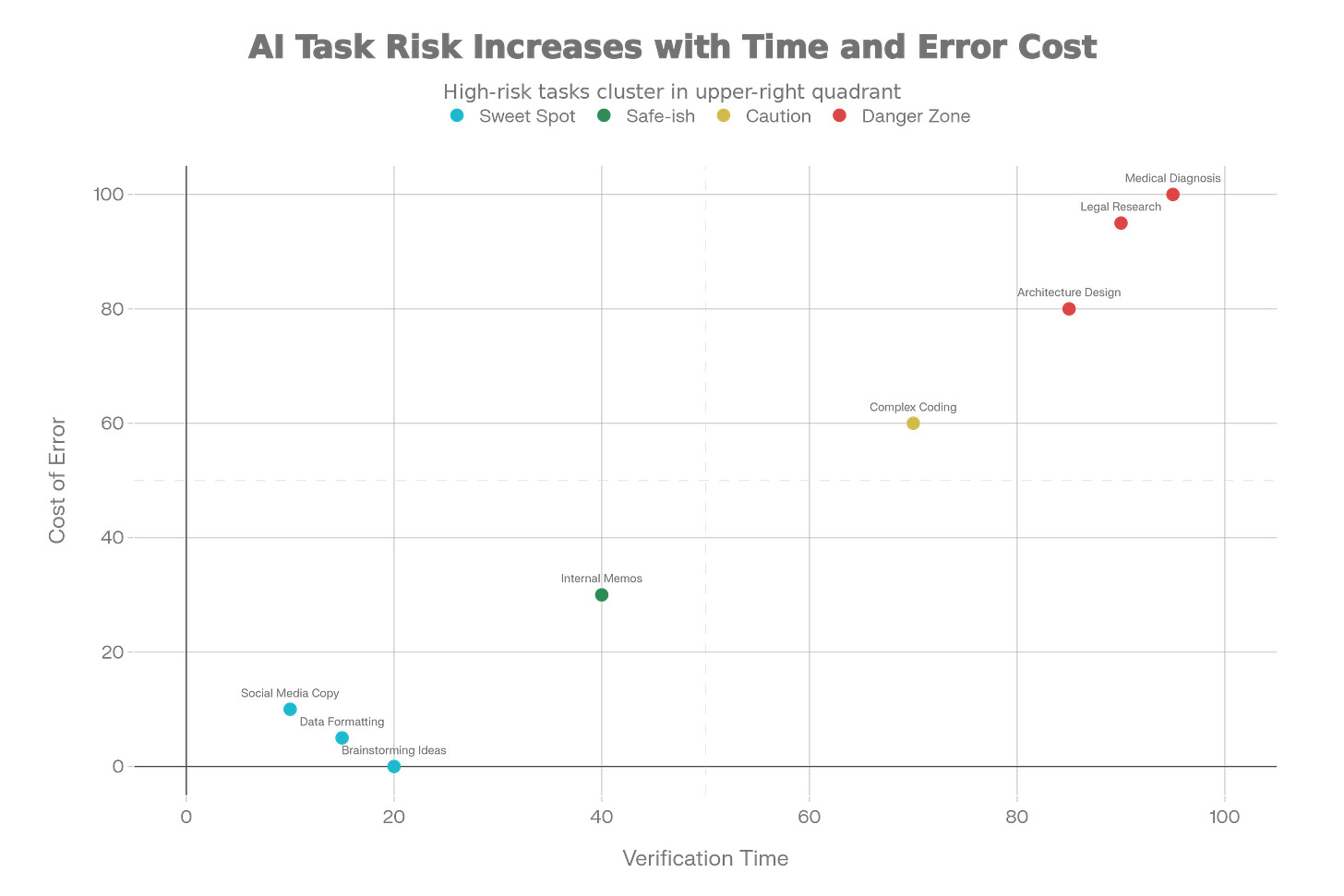

The AI Delegation Matrix helps identify which tasks are safe for AI. Tasks in the bottom-left (Low Risk, Fast Verify) are the ‘Sweet Spot’. Tasks in the top-right (High Risk, Slow Verify) are the ‘Danger Zone’ where manual work is preferred.
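The matrix can be read as a two-input lookup. The sketch below is one possible encoding, not a definitive one: the function name and boolean inputs are hypothetical, and the caption only names two quadrants, so the "judgment call" label for the two mixed quadrants is an assumption added here.

```python
def delegation_quadrant(high_risk: bool, slow_to_verify: bool) -> str:
    """Map a task onto the delegation matrix described in the caption."""
    if not high_risk and not slow_to_verify:
        return "sweet spot"      # Low Risk, Fast Verify: safe to delegate
    if high_risk and slow_to_verify:
        return "danger zone"     # High Risk, Slow Verify: prefer manual work
    # Assumption: the caption does not name the mixed quadrants.
    return "judgment call"


# Illustrative tasks; the risk and verify ratings are guesses for the example.
tasks = {
    "reformat a CSV export": (False, False),
    "draft a contract clause": (True, True),
    "summarize a long internal thread": (False, True),
}

for task, (risk, slow) in tasks.items():
    print(f"{task}: {delegation_quadrant(risk, slow)}")
```

Rating a task as high risk or slow to verify is still a human call; the lookup only makes that call explicit before you start prompting.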

Why The Speed Illusion Is So Convincing

Four forces keep the myth alive:

The vibe of motion: Watching text appear feels like progress.

Wrong metrics: “Time to first draft” isn’t “time to ship.”

Short trials: Friction compounds over weeks, not minutes.

Survivorship bias: Wins get posted. Losses get buried.

The METR study’s ~19% slowdown matters because it measured real work over real time, not a demo loop.

The Honest Mental Model

Before AI: you typed, you knew why, you owned correctness.

With AI: it types, you infer intent, you own the risk.

That trade can be great—or awful—depending on oversight cost. The best operators don’t trust AI more. They scope it ruthlessly, like a confident intern who never says “I’m not sure.”

A Simple Test Before You Delegate

Ask three questions:

How expensive is a wrong answer?

Trivial → fine. Moderate → verify. Catastrophic → don’t delegate.

Does checking take <50% of doing?

If not, you’re paying a supervision tax.

Would you trust a fast junior unsupervised?

If no, AI won’t magically earn that trust.

Fail any one, and you’re in babysitting territory.
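The three questions can be written down as a small checklist function. This is a sketch under stated assumptions: the names `ErrorCost` and `babysitting_flags` are hypothetical, the time estimates are whatever you guess up front, and only a catastrophic error cost counts as failing the first question (a moderate one passes, with verification).

```python
from enum import Enum


class ErrorCost(Enum):
    TRIVIAL = "trivial"
    MODERATE = "moderate"
    CATASTROPHIC = "catastrophic"


def babysitting_flags(error_cost: ErrorCost,
                      check_minutes: float,
                      do_minutes: float,
                      trust_junior_unsupervised: bool) -> list[str]:
    """Return the questions a task fails; an empty list means it passes all three."""
    flags = []
    # Question 1: how expensive is a wrong answer?
    if error_cost is ErrorCost.CATASTROPHIC:
        flags.append("a wrong answer is catastrophic")
    # Question 2: does checking take less than 50% of doing?
    if check_minutes >= 0.5 * do_minutes:
        flags.append("checking takes 50% or more of doing")
    # Question 3: would you trust a fast junior unsupervised?
    if not trust_junior_unsupervised:
        flags.append("would not trust a fast junior unsupervised")
    return flags


# Made-up estimates for two tasks.
email_template = babysitting_flags(ErrorCost.TRIVIAL, 2, 30, True)
schema_migration = babysitting_flags(ErrorCost.CATASTROPHIC, 40, 60, False)

print(email_template)     # no flags: fine to delegate
print(schema_migration)   # any flag at all means babysitting territory
```

Returning the failed questions, rather than a bare yes or no, keeps the reason for not delegating visible.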

Bottom Line

AI can absolutely boost productivity—but only where oversight is cheap and mistakes are survivable. For complex, high-stakes, context-dense work, doing it yourself is often faster than supervising a model.

The future isn’t “AI everywhere.” It’s discernment: knowing what to delegate, and having the discipline to just do the rest—without the overhead of prompting, verifying, and repairing.

The universe is strange. Productivity is stranger. And the fastest-looking path is not always the shortest one.

Sources & Further Reading

METR Study (2025): “Measuring the Impact of Early-2025 AI on Experienced Open-Source Developer Productivity.” Found a ~19% slowdown for developers using AI on complex tasks.

Harvard/BCG Study: “Navigating the Jagged Technological Frontier.” Documented how high-performing consultants performed worse on complex tasks when using AI.

Index.dev Report: “Developer Productivity Statistics with AI Tools.” Noted that 66% of developers spend more time fixing AI code than they save generating it.

AI Hallucination Data (2025): Recent benchmarks show hallucination rates of 6.4% in legal tasks and up to 48% in complex reasoning tasks.

Data and insights synthesized from 2024-2025 field research on AI productivity and human-computer interaction.

https://metr.org/blog/2025-07-10-early-2025-ai-experienced-os-dev-study/

https://drainpipe.io/the-reality-of-ai-hallucinations-in-2025/

https://www.askflux.ai/blog/ai-generated-code-revisiting-the-iron-triangle-in-2025

https://www.aboutchromebooks.com/ai-hallucination-rates-across-different-models/

https://www.nytimes.com/2025/05/05/technology/ai-hallucinations-chatgpt-google.html